Searching Hyperparameters?

A hyperparameter is a parameter whose value controls the learning process rather than being learned from the data. Training a machine learning model requires choosing constraints, weights, or learning rates that let it generalize to different data patterns, but finding a good set of these values can be a challenging and tedious task. Hyperparameter optimization searches for a tuple of hyperparameters that yields an optimal model, one that minimizes a predefined loss function on given independent data.

In this tutorial, we will look at the hyperparameter optimization algorithms commonly used in machine learning to reduce the time spent finding the right parameters.

Different Hyperparameters

1. Learning Rate: This parameter controls how large a step the optimizer takes at each update, and therefore how fast your model learns.

2. Dropout Probability: Dropout is a regularization technique that randomly sets activations in a neural network to 0 with a probability of ‘p’. This helps prevent neural nets from overfitting (memorizing) the data as opposed to learning it. ‘p’ is a hyperparameter.

3. Momentum: Momentum methods in the context of machine learning refer to a group of tricks and techniques designed to speed up the convergence of first-order optimization methods like gradient descent (and its many variants).
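To make the three hyperparameters above concrete, here is a minimal sketch in plain Python. The function names (`sgd_momentum_step`, `dropout`, `minimize_quadratic`) and the toy objective f(w) = w² are assumptions made for illustration; they are not part of any library or of the original post's code.

```python
import random

def sgd_momentum_step(w, grad, velocity, lr, momentum):
    """One gradient-descent update with momentum for a single scalar weight."""
    # The velocity keeps a decaying memory of past gradients;
    # lr scales how far the current gradient moves the weight.
    velocity = momentum * velocity - lr * grad
    return w + velocity, velocity

def dropout(activations, p, rng):
    """Inverted dropout: zero each activation with probability p, rescale the rest."""
    keep = 1.0 - p
    return [a / keep if rng.random() >= p else 0.0 for a in activations]

def minimize_quadratic(lr, momentum, steps=50):
    """Minimize the toy objective f(w) = w**2 from w = 5 and return the final w."""
    w, velocity = 5.0, 0.0
    for _ in range(steps):
        grad = 2.0 * w  # derivative of w**2
        w, velocity = sgd_momentum_step(w, grad, velocity, lr, momentum)
    return w

rng = random.Random(0)
print("no momentum:  ", abs(minimize_quadratic(lr=0.01, momentum=0.0)))
print("with momentum:", abs(minimize_quadratic(lr=0.01, momentum=0.9)))
print("dropout p=0.5:", dropout([1.0, 1.0, 1.0, 1.0], p=0.5, rng=rng))
```

On this toy problem, the run with momentum ends closer to the minimum at w = 0 after the same number of steps, which is the speed-up the momentum description refers to.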

Optimization Algorithms

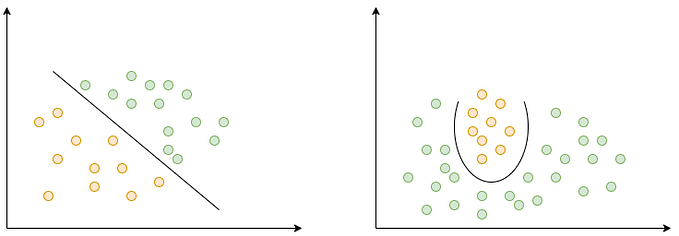

The traditional way of performing hyperparameter optimization has been grid search, or a parameter sweep, which is simply an exhaustive search through a manually specified subset of the hyperparameter space of a learning algorithm.
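As a rough illustration of what "exhaustive" means here, the sketch below enumerates every combination of a small grid with `itertools.product`. The scoring function `toy_score` is a hypothetical stand-in for training a model and measuring its validation accuracy.

```python
from itertools import product

def toy_score(lr, wd, dp):
    """Hypothetical stand-in for validation accuracy; peaks at lr=1e-2, wd=1e-3, dp=0.3."""
    return 1.0 - abs(lr - 1e-2) - abs(wd - 1e-3) - abs(dp - 0.3)

# The manually specified subset of the hyperparameter space
grid = {
    "lr": [1e-1, 1e-2, 1e-3],
    "wd": [1e-1, 1e-2, 1e-3],
    "dp": [0.1, 0.3, 0.5],
}

# Every combination of the listed values is a candidate to evaluate
names = list(grid)
candidates = [dict(zip(names, values)) for values in product(*grid.values())]

best = max(candidates, key=lambda params: toy_score(**params))
print(len(candidates), "combinations evaluated; best:", best)
```

Because each candidate is scored independently of the others, the evaluations could also be handed to separate workers.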

Advantages: Simple and easy to implement and understand. Can be easily parallelized.

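Disadvantages: Inefficient when the number of hyperparameters is large, because the number of combinations to train grows multiplicatively with every hyperparameter added, and it requires high computation power.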
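The cost is easy to quantify: the number of training runs is the product of the number of values tried for each hyperparameter. A small sketch, where the helper `grid_size` is a name chosen for this example:

```python
import math

def grid_size(values_per_param):
    """Number of training runs a full grid search needs."""
    return math.prod(values_per_param)

# Three candidate values per hyperparameter, for 3, 6 and 9 hyperparameters
for n_params in (3, 6, 9):
    print(n_params, "hyperparameters ->", grid_size([3] * n_params), "runs")
```

With only three values per hyperparameter, three hyperparameters need 27 runs, while nine need 19,683.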

Should we use it? : Probably not. It is terribly inefficient. Even if you want to keep it simple, you’re better off using random search.

Implementation:

    # fit_with(lr, wd, dp) is assumed to train a model and return (accuracy, model)
    lrs = [1e-1, 1e-2, 1e-3]
    wds = [1e-1, 1e-2, 1e-3]
    dps = [0.1, 0.3, 0.5]
    max_acc = 0

    for lr in lrs:
        for wd in wds:
            for dp in dps:
                current_accuracy, model = fit_with(lr, wd, dp)
                # Keep only the best model seen so far
                if current_accuracy > max_acc:
                    model.save('/home/grid_search_best_model')
                    max_acc = current_accuracy

    print("The highest accuracy is: " + str(max_acc * 100))

Random search is a technique where random combinations of the hyperparameters are used to find the best solution for the built model. In contrast to Grid Search, not all parameter values are tried out, but rather a fixed number of parameter settings is sampled from the specified distributions.
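Sampling "from the specified distributions" can be sketched as follows. The ranges mirror the ones used in this post's implementation, but the log-uniform draw for `lr` and `wd` is an assumption made for this example (the post's code samples integer exponents instead), and `sample_params` is a hypothetical helper.

```python
import random

def sample_params(rng):
    """Draw one hyperparameter setting from assumed search distributions."""
    return {
        # Log-uniform: every order of magnitude between 1e-7 and 1e-3 is equally likely
        "lr": 10 ** rng.uniform(-7, -3),
        "wd": 10 ** rng.uniform(-7, -3),
        # Plain uniform for the dropout probability
        "dp": rng.uniform(0.1, 0.5),
    }

rng = random.Random(42)  # fixed seed so the draws are repeatable
samples = [sample_params(rng) for _ in range(10)]
for params in samples:
    print(params)
```

The number of samples is a fixed budget chosen up front, independent of how many hyperparameters are being searched.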

Advantages: It is as simple as grid search, yet it often yields better results.

Disadvantages: Results have high variance from run to run, and it is still time-consuming.

Should we use it? : If trivial parallelism and ease of use are paramount, go for it. But if you can spare the time and energy, implementing Bayesian Optimization will reward the effort.

Implementation:

    import random

    max_acc = 0

    # The number of different hyperparameter values to try
    num_iters = 10

    for iteration in range(num_iters):
        # Pick random hyperparameters in a specified range
        lr = 10**(-random.randint(3, 7))
        wd = 10**(-random.randint(3, 7))
        dp = random.uniform(0.1, 0.5)

        # fit_with(lr, wd, dp) is assumed to train a model and return (accuracy, model)
        current_accuracy, model = fit_with(lr, wd, dp)
        if current_accuracy > max_acc:
            # Saved under its own name so it does not overwrite the grid search model
            model.save('/home/random_search_best_model')
            max_acc = current_accuracy

    print("The highest accuracy is: " + str(max_acc * 100))

Unlike the other methods we’ve seen so far, Bayesian optimization uses knowledge of previous iterations of the algorithm. With Grid Search and Random Search, each hyperparameter guess is independent. But with Bayesian methods, each time we select and try out different hyperparameters, the search inches toward perfection.

Bayesian Optimization provides a principled technique, based on Bayes' theorem, for directing an efficient and effective search in a global optimization problem. It works by building a probabilistic model of the objective function, called the surrogate function, that is then searched efficiently with an acquisition function before candidate samples are chosen for evaluation on the real objective function.
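To give a feel for what an acquisition function does, here is a minimal sketch of one common choice, expected improvement, for a maximization problem. It assumes the surrogate returns a Gaussian prediction (mean `mu`, standard deviation `sigma`) for each candidate; the candidate names and numbers are made up for illustration, and this is not necessarily the acquisition function any particular library uses by default.

```python
import math

def normal_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def normal_cdf(z):
    """Standard normal cumulative distribution."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def expected_improvement(mu, sigma, best_so_far, xi=0.0):
    """Expected improvement over best_so_far, given the surrogate's (mu, sigma)."""
    improvement = mu - best_so_far - xi
    if sigma <= 0.0:
        # No uncertainty: the improvement is known exactly
        return max(improvement, 0.0)
    z = improvement / sigma
    return improvement * normal_cdf(z) + sigma * normal_pdf(z)

# Hypothetical surrogate predictions (mean accuracy, uncertainty) for two candidates
candidates = {"certain": (0.80, 0.01), "uncertain": (0.78, 0.10)}
best_so_far = 0.80

scores = {
    name: expected_improvement(mu, sigma, best_so_far)
    for name, (mu, sigma) in candidates.items()
}
next_point = max(scores, key=scores.get)
print(scores, "-> evaluate next:", next_point)
```

Here the candidate with the slightly lower predicted accuracy but much higher uncertainty scores higher, which shows how the acquisition function trades off exploiting known good regions against exploring uncertain ones.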

For more information about theoretical concepts on Bayesian methods, please follow this article.

Advantages: Generally performs better in terms of accuracy than Grid Search and Random Search.

Disadvantages: It cannot be parallelized easily.

Should we use it? : Definitely. The exception is when we have a huge amount of computational resources and can run many trials in parallel; in such cases we should prefer Grid Search or Random Search over the Bayesian algorithm.

Implementation:

    from bayes_opt import BayesianOptimization

    # Bounded region of parameter space
    pbounds = {'lr': (1e-4, 1e-2), 'wd': (1e-4, 0.4), 'dp': (0.1, 0.5)}

    # Here fit_with(lr, wd, dp) must return a single number to maximize (the accuracy)
    optimizer = BayesianOptimization(
        f=fit_with,
        pbounds=pbounds,
        verbose=2,
        random_state=1,
    )

    optimizer.maximize(init_points=2, n_iter=2)

    for i, res in enumerate(optimizer.res):
        print("Iteration {}: \n\t{}".format(i, res))

    print(optimizer.max)

In Bayesian optimization, each new set of search values depends on previous observations (the accuracies from previous evaluations), so the whole optimization process is harder to distribute or parallelize than grid or random search.

Conclusion:

This quick tutorial introduced hyperparameter search with different algorithms. On average, Bayesian optimization finds a better optimum in fewer steps than Random Search and Grid Search, and beats the baseline in almost every run. The comparison also gives us insight into which algorithm to use for a particular use case, reducing the tedious task of finding the optimal parameters.

The source code for this post is available on my Github account.

References:

- Official Scikit-Learn page.

- Official Bayesian Optimization page.

- Bayesian optimization by Martin Krasser.

- P.Galeone’s Blog on Dropout.

- Sebastian Raschka page on Learning Rate.